We’re particularly excited to tell you about Release 5.3! Yes, it took a little longer than expected, but it’s by far the biggest release we’ve ever produced. It brings support for dbt on Snowflake, generic workflow code, Matillion ETL for Databricks and a ton of new capabilities for our business templates, such as multi-level looping, new condition types and signature objects.

dbt support, generic workflows, Matillion for Databricks and new template studio capabilities (Release 5.3)

February 6th, 2023

“VaultSpeed enables users to build their Data Vault model the right way and generates all necessary code for you. dbt acts as a platform to store, version and run these modular SQL scripts.”

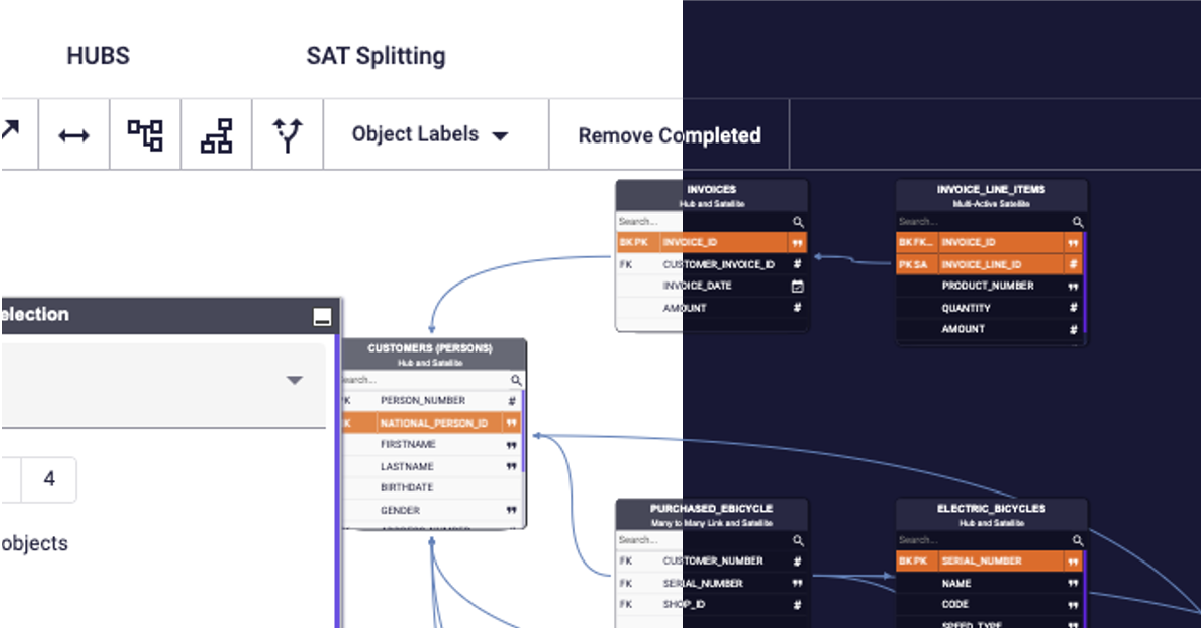

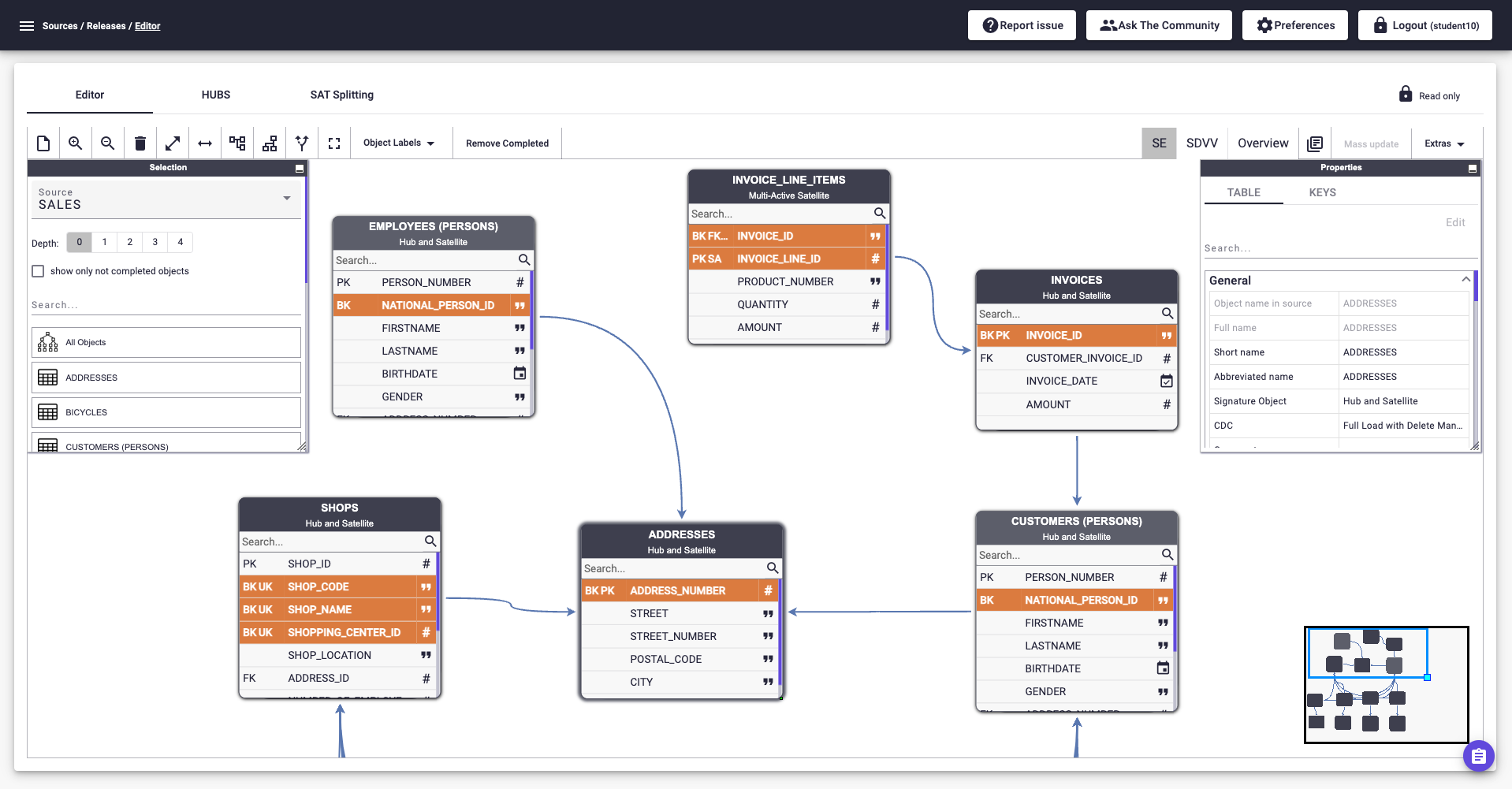

Graphical Data Vault modeling interface for dbt

dbt is popular amongst data engineers, thanks to its modular SQL capabilities and open-source community. The dbt tool is a data transformation tool that enables data engineers to transform, test and document data in the cloud data warehouse. The skills required are SQL, version control and data modeling. But ‘data modeling’ can mean different things.In dbt, the term model is commonly used to refer to such a modular SQL statement. It’s a piece of modular SQL code that describes both (1) how a source element is transformed into a target element and (2) the source and target details. This statement is stored in dbt’s repository and can take different shapes on the target platform: a view, a table, incremental or ephemeral.

In Data Warehousing on the other hand, the term data model refers to an abstract model that organizes multiple elements of data and explains how they relate to each other. So, by definition, it describes multiple elements. There are different kinds of data models:

- conceptual: the highest level at which you describe all data elements and their relations

- logical: a more detailed description of these elements regardless of the database management system

- physical: adds technical detail to the logical model to implement it on a physical database.

You can see why the dbt definition of a model might lead to confusion! In fact, a dbt model uses the (generic) source and target definitions of any data model. So even before a data engineer starts building a dbt model, they’ve already (consciously or not) thought about the data model.

Source data model in VaultSpeed

That brings us to the main reason why we support dbt as a target for deploying Data Vault transformation code. Automation must be data-driven, but this is not enough.

- VaultSpeed encourages users to build their Data Vault model the right way. It enables users to see and enrich source and target model metadata through a comprehensible GUI. It then automatically generates all necessary code for you – based on that metadata – so you don’t need to code it yourself.

- dbt acts as a platform to store, version and run these generated modular SQL scripts. dbt documentation features such as key definitions, data lineage and dependency alerts make the solution ideal in a Data Vault context.

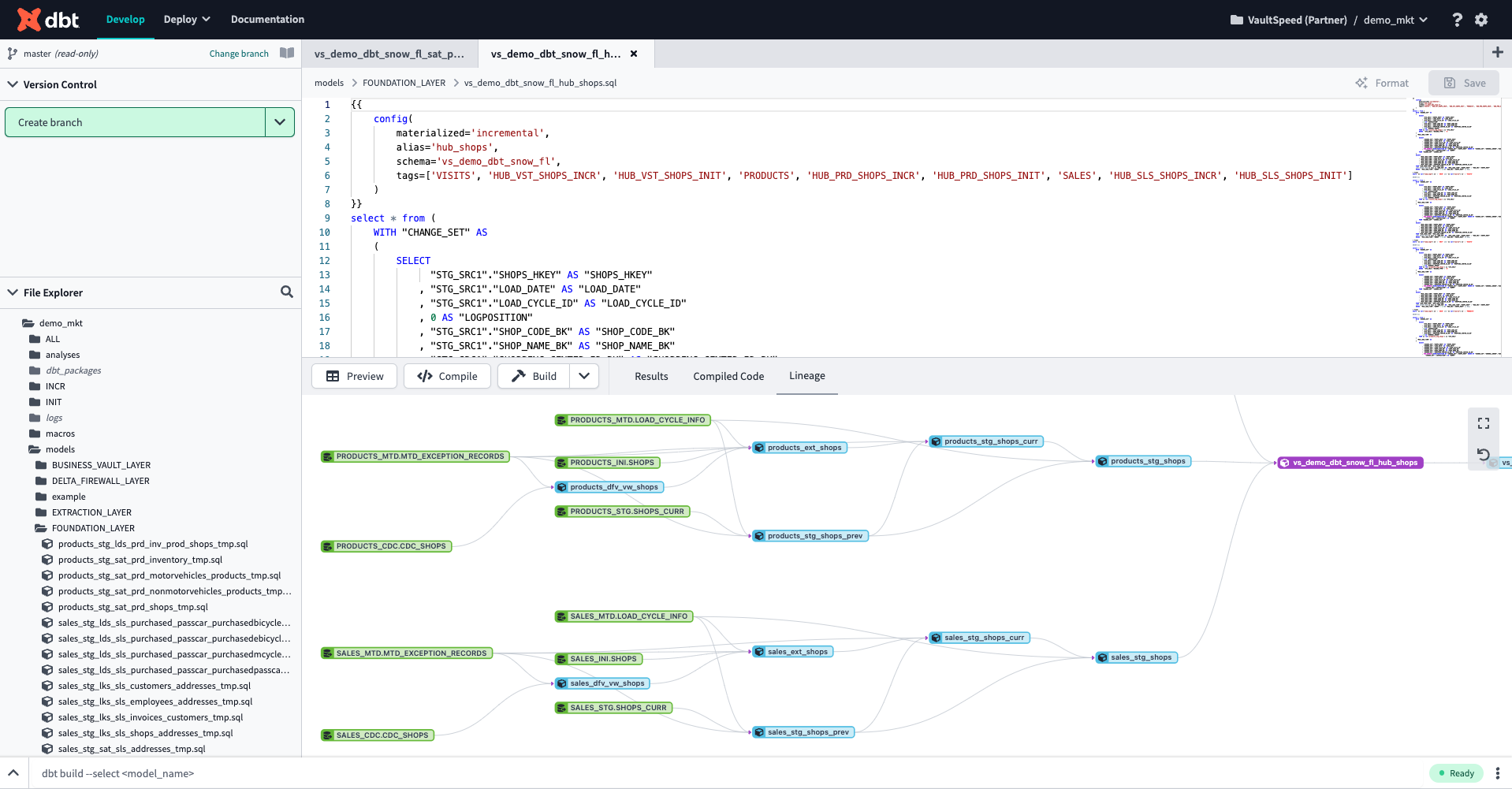

Generated dbt model for a hub and corresponding lineage

dbt support is limited to Snowflake in this first release. Support for Databricks and Azure Synapse is on the horizon.

To get a better idea of what the integration looks like, watch this video.

Watch the demo

Platform agnostic workflows

VaultSpeed’s FMC (Flow Management Control) module allows users to automate workflows and scheduling for market-leading schedulers such as Apache Airflow or Azure Data Factory. But what if you’re using a different scheduler, or you want to use your target platform’s native capabilities to orchestrate your data loads?

Generic FMC will allow you to generate generic workflows that can be implemented into any scheduler or workflow management solution.

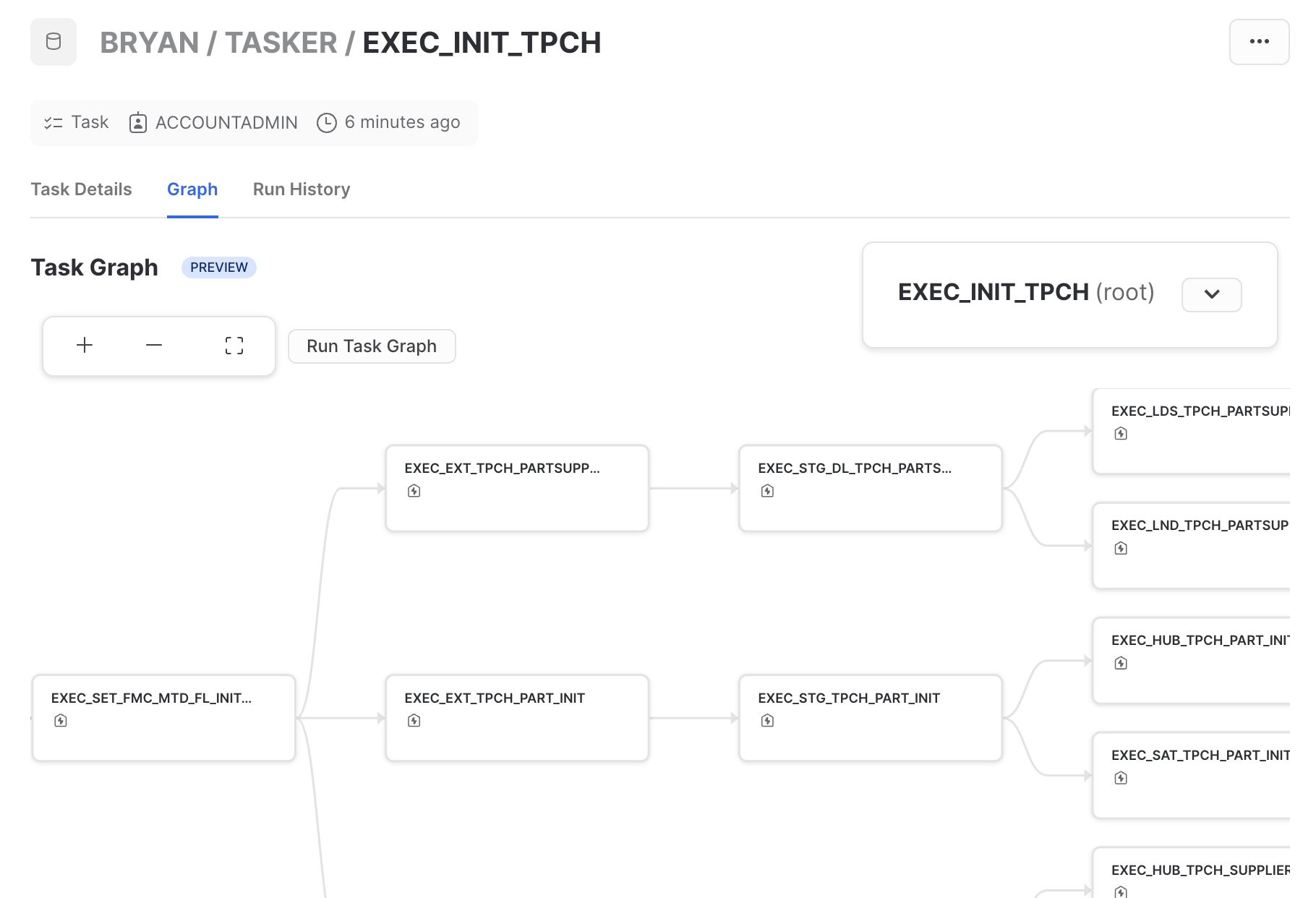

FMC will generate JSON files containing all the data needed to build your own FMC implementation. All the logic to calculate and keep track of the loading windows is handled by procedures generated by VaultSpeed. The only thing you need to set up is a process that executes these procedures in the right order. You‘ll also need to handle metadata such as the load date and the load cycle id.

Find more details in the Docs portal. And get inspired by this community blog post on how to build a workflow solution natively on Snowflake using our generic FMC module.

Workflows built using Snowflake native features only

Matillion for Databricks

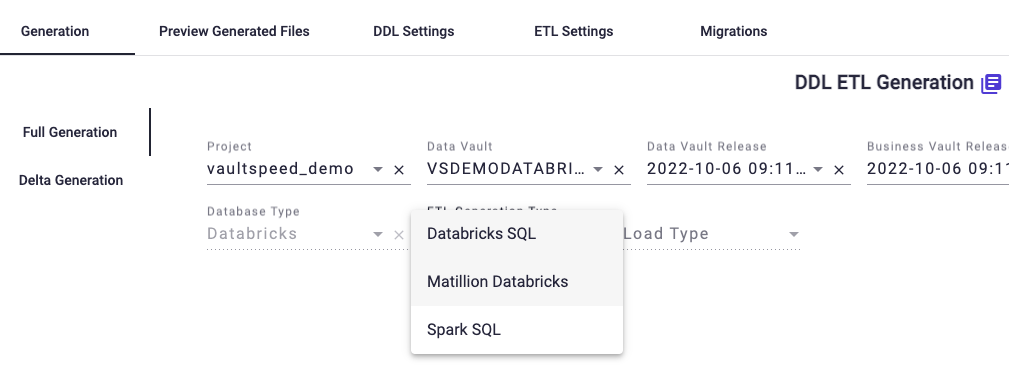

On the ETL side, we’ve added support to run Matillion on Databricks. VaultSpeed now generates Matillion ETL code for the lakehouse.

Matillion users can automate the mappings that load data in the Data Vault area (Silver layer) and focus on tailormade transformations in the Gold layer of the lakehouse.

Adding Databricks as a target, our current support for Matillion now covers Databricks, Snowflake and Azure Synapse. Using generic FMC, described above, enables you to automate your Matillion workflows end to end on all 3 platforms as well.

Generate Matillion Databricks code

Enhanced templating capabilities for the presentation area

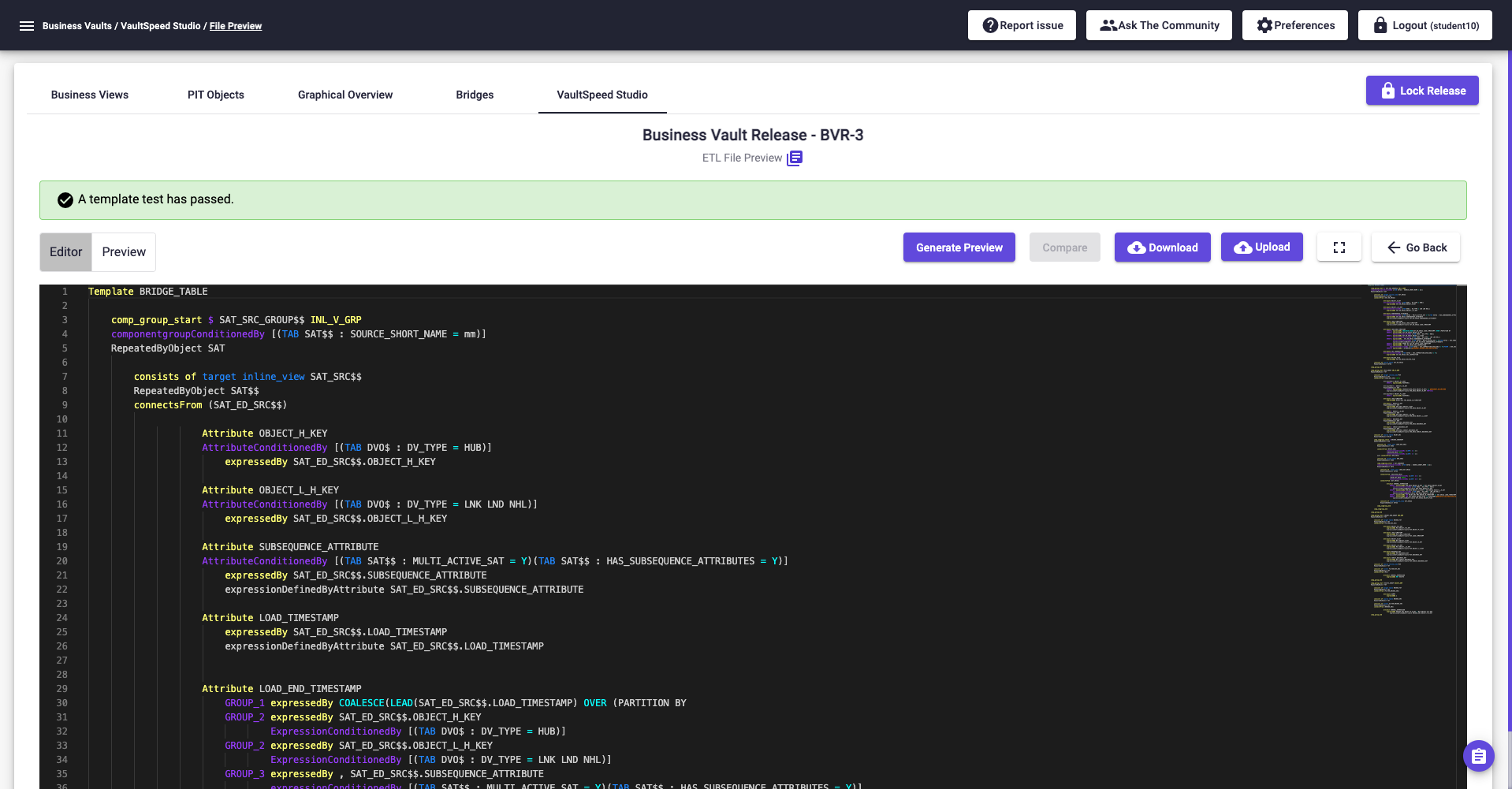

Our VaultSpeed Studio addon module got a major upgrade in Release 5.2. In Release 5.3, we introduce new features that enable you to build more sophisticated business templates.

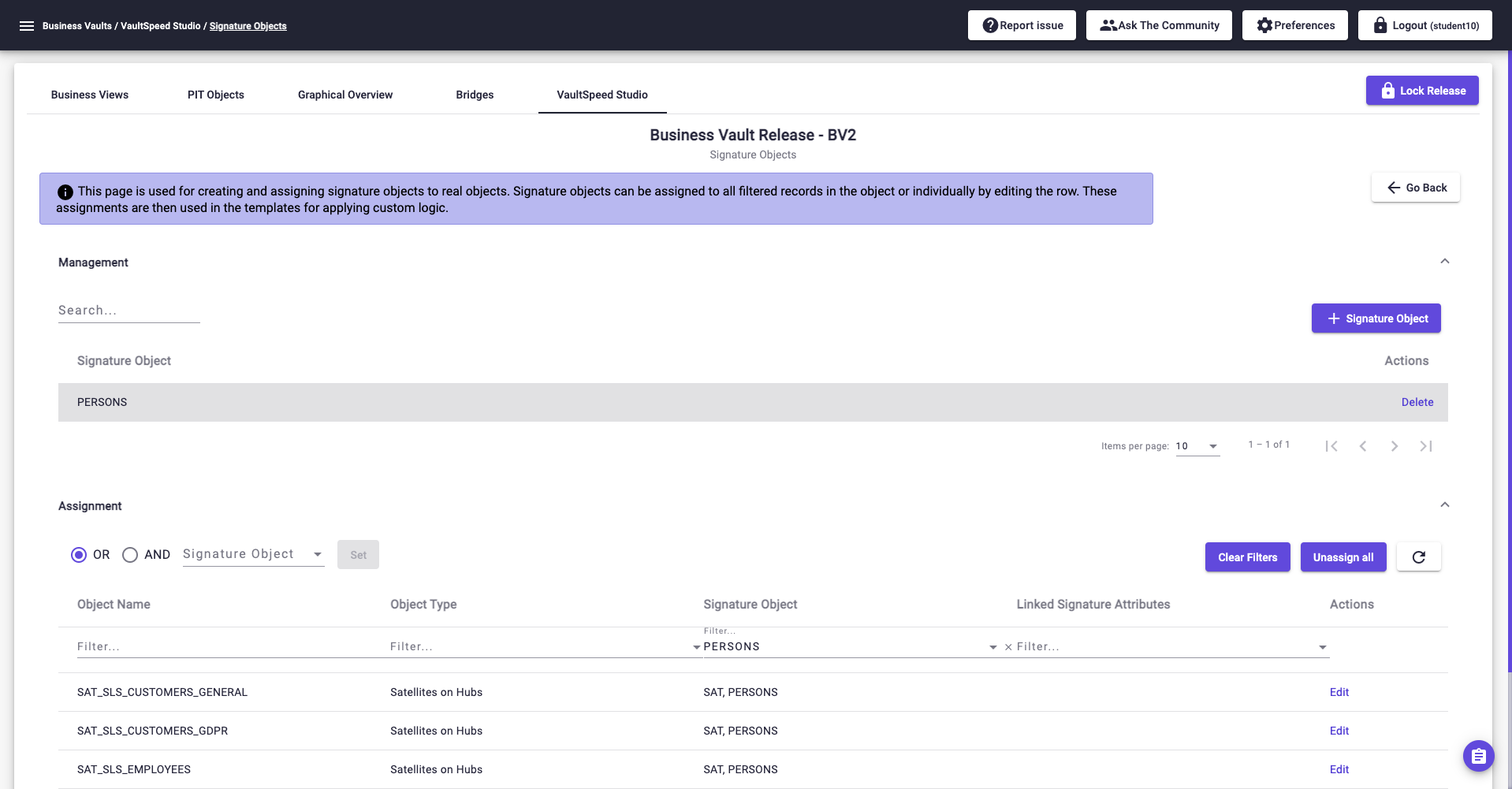

- Define your own custom signature objects. For example, set a specific signature on all RDV satellites that contain interest rate data and treat its metrics differently.

Signature object screen

- Use PIT (Point in Time) tables as a base type for your templates, making it easier to build virtual dimensions.

- Multi-level loops: when looping over dependent objects, choose the level at which you want to perform a loop — for example, when building a bridge template. You can loop all dependent hubs of a bridge and subsequently loop over all dependent satellites on those hubs. Depth level in the hierarchy is indicated by $. HUB$ and SAT$$ in the example case.

- Use new condition types to condition a loop.

- TAB parameter 'TABLE_NAME': (TAB SAT$ : TABLE_NAME = SAT_MS_CUSTOMERS)

- TAB parameter 'SIGNATURE_OBJECT': (TAB SAT$ : SIGNATURE_OBJECT = GDPR_SAT)

- Like condition operator for all conditions: (TAB SAT$ : TABLE_NAME LIKE SAT_MS%

More details and advanced examples of the improved templating capabilities can be found in the docs.

New template capabilities like multi-level looping and new condition types applied in an example for a bridge table

Other changes

In addition to the major features already listed, Release 5.3 brings some valuable enhancements – often based on customer requests – to improve the overall experience. Details can be found in change log.

- Deletes in non-historical links: when a transaction is deleted in the source, you can choose to insert a record in the NHL that nullifies the original transaction.

- Support for multi-path bridges: for example, think of a bridge (or dependent fact table) that needs to include both invoice and delivery address keys.

- The new UI is now generally available: for a refresher, you can watch this recorded webinar.

- When an object in the source gets renamed, VaultSpeed detects the previous name as deleted and the new name as a new object. Now you can link this new name to the old one.

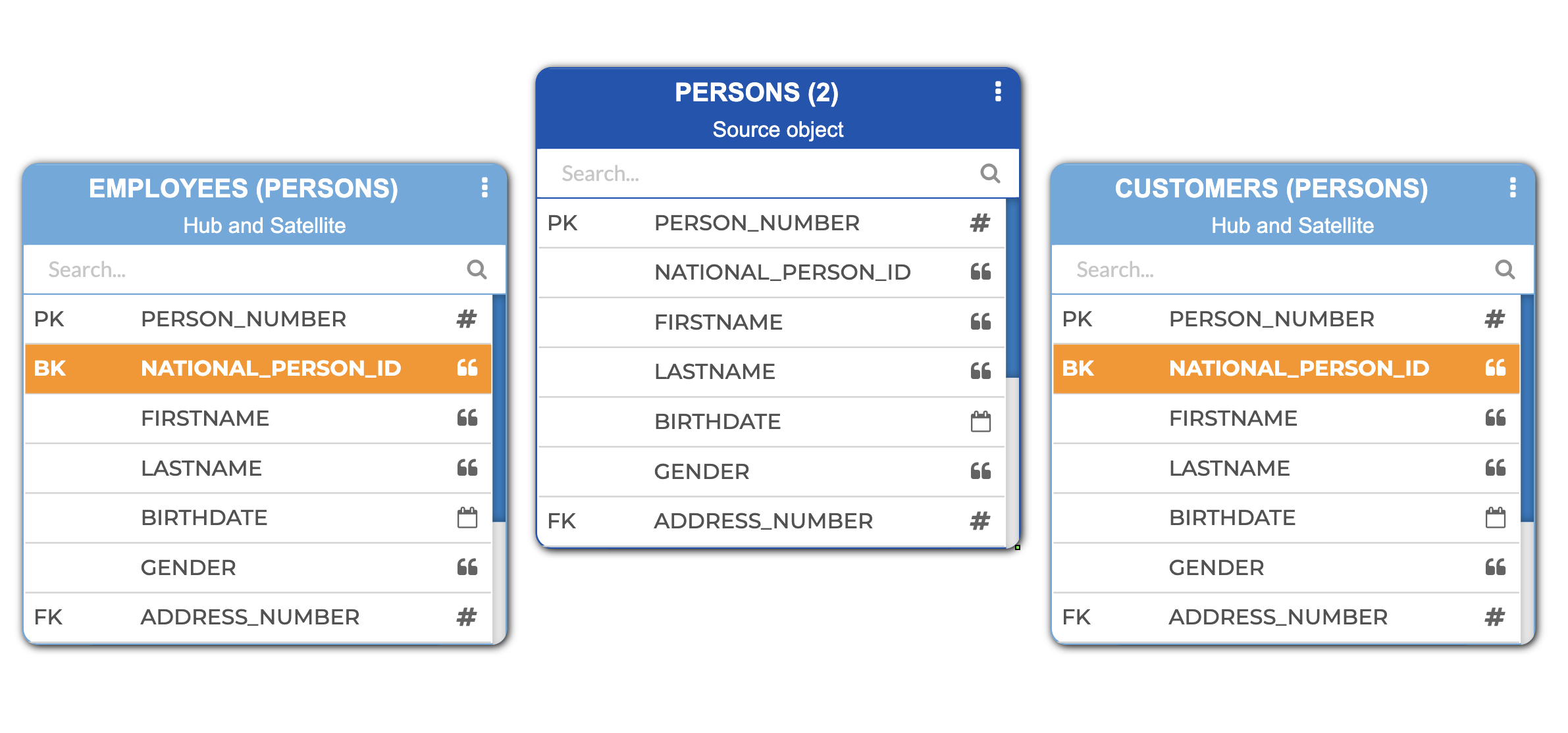

- Support for intra-source merged master.

- We renamed and added some CDC (Change Data Capture) types.

- You can now use different time zones in the FMC and the target for the load dates when the load date is not time-zone aware (TIME_ZONE_LOCAL = Y).

- We added support for Airflow version 2.3.0.

- We added support for SHA256 hashing for Snowflake and BigQuery.

- New API endpoints. Read more